Manuscript submission in the old days

Picture this. You have worked on a study for a year or two. You analyze the data, write a manuscript, make the appropriate figures, revise the text until the file name has gone from StudyOfCoolThingA.wp to StudyOfCoolThingZ.wp (back then I don’t think Word existed yet; in any case, my thesis laboratory used a program called WordPerfect). You decide that your manuscript is ready to be shared. You discuss with your co-authors which is the most appropriate journal for this particular opus. Once that is decided, you format the bibliography accordingly, read through the manuscript one more time, and decide that the day has come to send it in. You write a cover letter.

Then it is on to printing. You go get a sheet or two of letterhead (actual paper with the University and Department addresses on it and often a watermark) from the Department office and print your missive on said letterhead using the dot-matrix printer in your laboratory. Next you print out a copy of the manuscript, double spaced throughout with figure legends at the end. This is typically going to be 35-60 pages. So the bzz-bzz-bzz of the dot matrix lasts for a while. Once the original has been printed, you are back off to the departmental offices where you use the Xerox (and I mean Xerox) machine to make 5 copies so that you will have 4 copies and the original to send to the journal and one copy to keep. You desperately hope that the Xerox machine will not run out of toner, have a paper jam, or otherwise decide to give up the ghost while doing this job.

Then it is time to deal with the figures. Each figure has to be on glossy paper that is mounted on hard cardboard placards. To mount the figures, we used an adhesive that came out of a spray can. You could layer the floor or table top with newspaper or Chux (aka blue diapers that are blue plasticky on one side and white absorbent material on the other side) or whatever but inevitably at least something in the environs got completely and disgustingly sticky. Usually this included the floor which meant that for the next couple of weeks there would be that stick-stick-release sound every time anyone walked over that spot and then for a few steps following.

The figures to be mounted on the cardboard had to be printed in a darkroom. This was true even for graphs and certainly for photomicrographs (photographs of images taken through a microscope) and electrophysiological traces. So off you go to the darkroom. You print photomicrographs and so on. Then you have to cut each photomicrograph perfectly squarely. This requires knowing how to hold the paper in the paper cutters with the 2-foot-long machetes…er…blades just right so that all of your digits remain attached. Once you have the squared-off photos, you use the spray adhesive to paste them onto cardboard backings. Then there are the letter appliqués that you transfer onto the photographs to label them A, B, C and so on. Scale bars are transferred the same way, as are arrows and other symbols that label the photos. Mercifully, most journals required only 3 copies of each figure, rather than the 5 copies required for the text. Two copies were sent to reviewers and one was kept at the journal office.

Once you had accumulated this roughly half-foot stack of paper and cardboard, you would go off to find an appropriately sized box. Nothing was stapled and so back then, all those bulldog clips had a reason for existing; in fact, we coveted them, stowing them away whenever we happened upon one. Each copy of the manuscript was clipped together. Then the figure placards were put together with rubber bands. The whole pack was placed into a box with enough protection to ensure the safe arrival of the figures. Then you addressed the box and carted it off to the department secretary who would mail it for you.

As you may be able to appreciate now, submitting a manuscript was a labor-intensive ordeal back in the day. It was an all-day, or at least half-day, affair that culminated in a moment that forced a pause to savor the accomplishment, the feeling. Just as a student who finishes the last final exam of a semester does not immediately start preparing for the next semester’s courses, after submitting a paper, I always wanted to take off, celebrate, or at least mark the ending with something besides diving back into the next project.

The editorial process of yore

Now let’s time travel to the journal office where boxes of manuscripts arrived each week. Back then I was not reviewing – I was a graduate student. But the gist of the process was that for each manuscript, the Editor had to find reviewers. How many? Well, two. Why? Basically, because one is not enough, two is challenging as hell and finding three for every manuscript would be impossible, surely eliciting mass editorial resignations. To get two reviewers, the Editor or his (typically; occasionally her) assistant would call up some people and hope that a couple of them would agree to review. The journal office would then send one copy of the manuscript text and figures to each reviewer.

Scientific publishing in the 21st century

Now that we have strolled down memory lane, let’s consider how peer review is conducted today. Papers are submitted electronically. Spray cans of adhesive, dot-matrix printers, physical letterhead, and the like are gone. There are now electronic hoops that you must navigate. For example, if your running title is 1 (or 13) characters over the limit, you will not be allowed to continue. The text of the abstract also has a limit, typically in the number of words. Some journals require that your total manuscript be under an upper limit of so many characters and so on. Notably, all of these length requirements reflect the scientific publishing world’s “slavish adherence to print” in the words of Randy Schekman, Nobel laureate and Editor-in-Chief of eLife. Not one of them stems from an intellectual or even literary concern.

Today, manuscript submission still takes time but definitely far less than in my graduate school days. And no paper at all is generated. Data storage needs tick up but the trees are safe from direct plundering in the name of manuscript submission.

Then, what happens on the editorial side? Well, curiously, the customs haven’t changed much. Sure, email has replaced the phone, which I suspect replaced letters sometime before I got into science. But the crux of the system is still to send a manuscript to two reviewers and then have the Editor decide based on those two reviews. Really? With the world wide web at our disposal, why are we still using two reviewers?? Because 30 years ago we could only bear sending paper manuscripts to two reviewers? Wow, what a well thought-out reason.

I don’t remember when I first reviewed a manuscript but I know it was not during graduate school. [This is unfortunate. Now there is a concerted effort to bring scientists into the reviewing fold early. This is typically done as a collaboration between a student or post-doctoral fellow and his or her mentor. Many journals have adapted to this by allowing the designated reviewer to easily credit any junior scientist who helped with the review process.] In any case, by now I have a great deal of experience serving as a reviewer and editor. Currently, I am on the Board of Reviewing Editors at eLife.

When I am assigned a manuscript, I look for 3-4 reviewers besides myself. The reason that I do this is that any one reviewer is typically not an expert in all parts of the study and even two reviewers can rarely cover the entire range of expertise needed. Articles are increasingly elaborate and involve several methodologies along with at least one conceptual framework. My view is that I would like to hear from experts in all of the methods and conceptual frameworks employed.

Can’t we trade in the horse and buggy for an electric car?

Now imagine that instead of tweaking an ancient system built on USPS constraints, you were to design the system from scratch. What would you do? Well, I know what I would do. I would let the world wide web do the work. Scientific teams would upload manuscripts onto an available site and the community of scientists would comment constructively, engage in debate, and all-around help to situate the findings within an appropriate context, raise relevant critiques, and openly discuss the new questions raised by the work.

As fate would have it, there are public sites for preprints, meaning manuscripts that have not received peer review. The first major site of this type, arXiv, was started by physicists in the early ’90s. More recently, scientists at Cold Spring Harbor Laboratory started a biology version of arXiv, appropriately named bioRxiv. Anyone can upload a manuscript and after a brief quality check, the manuscript is live. It is tweeted and interested people can comment on the work. In this way, one could conceivably receive tens, hundreds or even thousands of reviews rather than the traditional two.

There are many things that are right about the preprint movement and several things that still need tweaking. What is right (and here I will confine myself to bioRxiv which is what I am most familiar with):

- The most important consequence of preprints is that scientists can get their work out in a timely fashion. As a result, science progresses apace.

- One of the most exciting pieces of the preprint movement, not yet fully realized, is the possibility to receive comments on a paper from a wide range of people. An important feature of preprint sites is the ability to upload manuscript revisions enabling authors to use the scientific community’s comments to improve the communication. A full record of all versions remains available on the site.

- Discussions can take place either on Twitter or on the bioRxiv site.

- When the manuscript is submitted, it receives a doi or digital object identifier, just as is true of peer-reviewed journal articles. This number serves as an official time stamp that the authors published this work on this day. If another group publishes later, the doi stamps are a record of the order of discovery.

- If the manuscript is subsequently submitted to a journal, goes through peer review and is published, then bioRxiv links to the journal site. The published paper thereafter goes by the doi number assigned by the journal, while the preprint keeps its own doi and points readers to the published version.

The potential problems with bioRxiv are:

- Scientists generously reading and commenting on every interesting paper that comes up is a lovely idea but, sadly, it remains just that: an idea. It does not happen often. The reason is the information overload that we all know oh so well. Every once in a while, a preprint makes a big splash. A great example of this was “The natural selection of bad science” by Paul Smaldino and Richard McElreath, posted on arXiv on May 31, 2016. This paper attracted a great deal of deserved attention even before it was published less than 4 months later in Royal Society Open Science. This example notwithstanding, most preprints land with a resounding thud. This part of the promise remains elusive for now.

- Naturally, people fear abusive comments or individuals who troll a particular scientist, group or idea. I don’t really know what to say about this except to ignore it. I suspect it will not be a large problem.

[My work and that of many others, such as Frans de Waal, strongly suggests that mammals are fundamentally helpful. From my time working in publishing ethics, it is also clear to me that scientists are sincerely doing their best to figure things out; science is hard and getting truths right is a challenge; and finally, very few scientists get up in the morning wanting to toss a wrench into the works. In sum, I am guessing that the havoc-wreaking commenters will be few and that ignoring them will be largely effective.]

One intriguing idea that was raised by a postdoctoral fellow was to find a way to give credit to scientists who write true reviews of preprints. Rewarding constructive behavior may be the best defense against obnoxious behavior. Publons is a group dedicated to crediting scientists for the reviewing and editing work that they do. Ideally, Publons could acknowledge full, thoughtful and constructive reviews of preprints just as they acknowledge reviews for journals.

If we have access to preprints, do we need “post-prints”?

Many others have written about various broken aspects of the peer review system. As detailed in an editorial in The New Atlantis from more than 10 years ago, peer review “is not synonymous with quality” control. Far from it. In an essay comparing scientific publishing to the music industry circa 1990, Steve Shea aptly laments that peer review is “so stochastic that a) Good work often gets delayed by needing to roll the dice [= submit a manuscript] many times and b) Any flawed study can overcome its shortcomings by rolling the dice enough times.” Peer review is error-prone, novelty- and risk-averse, biased towards well-known researchers, and capricious. For an amusing, and thankfully fictional, imagining of how a peer-review decision letter to Watson and Crick would read, see Ron Vale’s missive on the pace of scientific publishing. In fact, Watson and Crick’s 1953 article describing the structure of DNA was editorially (not peer-) reviewed and accepted.

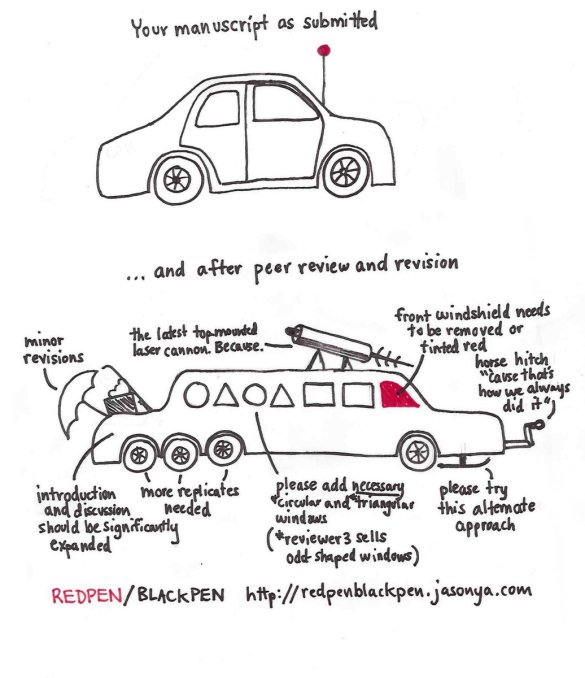

One of my pet peeves about peer review is how reviewers try to transform the authors’ study into something else. Endless rounds of review attempt to change the vision of a manuscript from that of the authors to that of one or more of the reviewers (see peer-reviewed car above). The result is a confused amalgamation of perspectives that is bloated in some areas and too spare in others. I say let the authors tell their story.

Michael Eisen and Leslie Vosshall call for a system of post-publication review in a white paper that has not (yet?) moved the needle as much as could be hoped. Scientific publishing has two aims: communication of findings to move scientific progress along and then assignment of credit in order to fuel the sociology of science: jobs, promotion, and funding. It is service to the latter aim that slows down the former aim – scientific progress!!! – to a snail’s pace. Eisen and Vosshall call for the end of pre-publication peer review. They state, “Post-publication peer review of pre-prints is scientific peer review optimized for the Internet Age.” I agree.

Arriving at the future

I hope that understanding the origin of the two-reviewer custom helps younger scientists appreciate the nonsensical nature of this practice. Since the review process is based on nonsense, we don’t have much to lose by at least trying a crowd-sourced version of peer review. And if crowd-reviewing ever lives up to its potential, or even comes close, then the scientific advantage of publishing in established journals begins to evaporate. The sociological advantages of established journals are likely to remain at least for a while.

As of right now, preprints (with doi-s) can be included on NIH grants and on curricula vitae for promotion. A significant obstacle is that these scientific communications may not be given much weight by either grant reviewers or promotion committees. Promotion committees are likely to be a particular source of inertia in the system. It may be decades before they stop using idiotic metrics such as Impact Factor and start looking at and reading Open Access preprints.

I have often said that if it were not for the career aspirations of my amazing students, I would just publish findings on my blog. Now I will amend this to publishing on bioRxiv, an easy substitute for my blog and ultimately a better idea. I won’t feel sorry for the loss of for-profit scientific publishing, exemplified by the publisher behemoth Elsevier. I suspect I will feel a nostalgic pang or two for society journals such as the Journal of Neurophysiology. However, there are ways for these journals to stay relevant by indexing, annotating and highlighting the preprints relevant to the society’s interests. This is no small task: more than 1400 preprints per month have been posted on bioRxiv for the last couple of months, and that number is sure to grow in the coming years.

I hope the future is here and can be recognized.

This post has been copied from The brain is sooooo cool!